Unmasking Propaganda on Messaging Apps

By examining millions of political discussions on Telegram, scientists from Switzerland and Germany have identified the digital fingerprints of coordinated disinformation, and developed a tool for more effective moderation.

In countries with restricted press freedom, social media channels, and particularly messaging apps such as WhatsApp, Telegram, and Signal, often serve as the first and only source of news. Yet with the rise of artificial intelligence and a growing number of global conflicts, the risk of encountering fake news and orchestrated disinformation campaigns is increasing rapidly.

Moderators under pressure

On such messaging platforms, verifying content is left to human moderators. There is no independent body for fact-checking. As a result, moderators must define their own rules and manually remove harmful or inappropriate posts. It’s an arduous task, often psychologically taxing.

A new weapon against disinformation

A recently published study by researchers from EPFL in Lausanne, in collaboration with Germany’s Max Planck Institute and Ruhr University Bochum, presents a breakthrough in detecting coordinated propaganda on messaging apps.

One of the most notable characteristics of propaganda accounts is that they post messages with the same wording in different places, sometimes across various channels. While regular accounts post unique messages, propaganda accounts form large networks that repeatedly disseminate the same content.

The researchers leveraged this repetition to design an automated detection system capable of identifying disinformation patterns. Their analysis covered 13.7 million comments from 13 Telegram channels, focusing on political and current affairs discussions. Around 1.8 per cent of all comments were classified as propaganda. The majority originated from pro-Russian networks, with a smaller proportion being pro-Ukrainian. Remarkably, the algorithm was able to detect propaganda based on a single comment with an accuracy of 97.6 per cent, 11.6 percentage points higher than human moderators.
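To make the repetition signal concrete, here is a minimal Python sketch of how identical wording posted by several accounts could be surfaced. It is not the researchers' system: the `Comment` record, the `normalize` step, and the `min_accounts` threshold are all assumptions chosen for illustration, and the sample comments are invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    account: str  # hypothetical account identifier
    channel: str  # hypothetical channel name
    text: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match.

    This normalization is an assumption of the sketch, not a step from the study.
    """
    return " ".join(text.lower().split())


def find_repeated_messages(comments, min_accounts=2):
    """Return {normalized text: set of accounts} for texts posted by several accounts.

    min_accounts is an illustrative threshold, not a value from the study.
    """
    accounts_by_text = defaultdict(set)
    for comment in comments:
        accounts_by_text[normalize(comment.text)].add(comment.account)
    return {
        text: accounts
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


# Invented sample data: two accounts share one wording, a third posts something unique.
comments = [
    Comment("acct_a", "channel_1", "The same  slogan, word for word"),
    Comment("acct_b", "channel_2", "the same slogan, word for word"),
    Comment("acct_c", "channel_1", "I think the report missed some context"),
]
repeated = find_repeated_messages(comments)
```

In this toy input, only the wording shared by `acct_a` and `acct_b` is flagged, while the unique comment from `acct_c` is ignored. A real system would need far more than exact matching, which is why this should be read as an illustration of the idea rather than a detector.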

Patterns of propaganda accounts

According to the study, propaganda accounts tend not to start discussions but instead respond to existing comments containing keywords such as “Putin” or “Zelenskyy”. These accounts frequently repost identical messages across multiple threads or even different channels, while ordinary users post unique content.

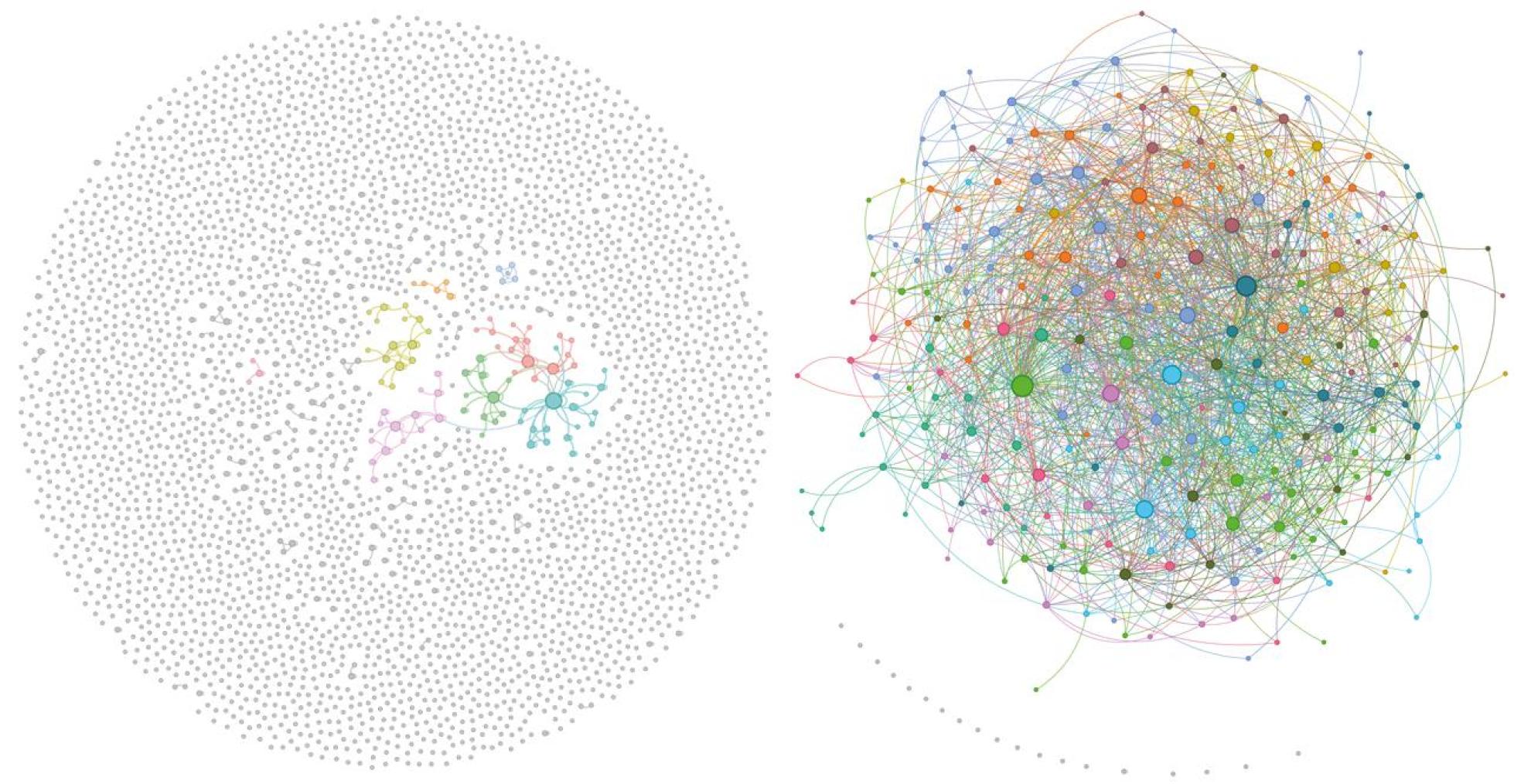

Each dot represents a Telegram account; each line connects accounts that have shared an identical message. The difference is striking: ordinary users (left) post independently, while propaganda accounts (right) form tight networks that recycle the same content.
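The network described in the caption can be approximated in a few lines of Python. The sketch below links accounts that posted an identical message and then groups them into connected clusters. The function names `build_graph` and `clusters` and the sample posts are hypothetical, and the study may construct its networks differently.

```python
from collections import defaultdict
from itertools import combinations


def build_graph(posts):
    """Link accounts that posted an identical message.

    posts is an iterable of (account, message) pairs; the result maps each
    account to the set of accounts it shares at least one message with.
    """
    accounts_by_message = defaultdict(set)
    graph = defaultdict(set)
    for account, message in posts:
        accounts_by_message[message].add(account)
        graph[account]  # make sure isolated accounts still appear as nodes
    for accounts in accounts_by_message.values():
        for a, b in combinations(sorted(accounts), 2):
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


def clusters(graph):
    """Group accounts into connected components with an iterative traversal."""
    seen, result = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(graph[node] - seen)
        result.append(component)
    return result


# Invented sample data: three accounts chained by copied text, one independent user.
posts = [
    ("acct_a", "identical slogan"),
    ("acct_b", "identical slogan"),
    ("acct_b", "another copied line"),
    ("acct_c", "another copied line"),
    ("acct_d", "a one-off personal opinion"),
]
groups = clusters(build_graph(posts))
```

Here `acct_a`, `acct_b`, and `acct_c` end up in one cluster because copied text chains them together, while `acct_d` stays on its own, mirroring the contrast between the two panels of the figure.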

Supporting human moderation

The research team hopes their tool will lighten the load for moderators, who cannot be available around the clock. Continuous exposure to manipulative or hostile content can lead to significant mental strain. By integrating automated systems, platforms could not only improve content moderation efficiency but also protect the well-being of those tasked with safeguarding online discourse.